By Garland Joseph

Qualitative research aims to understand real-world problems and can offer concrete solutions.

The Active Aging Research Team (AART) conducts interview-based qualitative research to understand how programs like Choose to Move affect participants’ quality of life. Many interviewees come from diverse linguistic communities, including Punjabi, Persian, and Chinese, and the team recognizes that capturing their experiences is essential to a complete picture of how these programs affect participants’ well-being. But there is one problem: to be used for research, these interviews must be translated into English, and professional translation is both costly and time-consuming. If only English-language interviews are used, however, the data reflect a partial and skewed picture of the people AART serves. This summer, AART set out to explore how AI could be implemented thoughtfully to enhance, rather than undermine, qualitative research and provide a viable transcription and translation solution.

How do researchers incorporate AI into the research life cycle to support qualitative data collection and analysis?

Like any new technology, AI comes with both benefits and risks. The rise of deep learning in large language models (LLMs) has enabled rapid and cost-effective production of translations (1). While this opens exciting opportunities for expanding data collection and analysis, AI is not neutral and comes with its own challenges. We explore these challenges and suggest solutions!

Challenge #1: Data privacy

One primary concern is that many third-party vendors may scrape or store data entered into their models, potentially exposing sensitive information from the interviews. Vendors may also use this data for product development without participant consent (7). AART sought out vendors that would protect interviewees’ information and fit within UBC research ethics requirements.

Solution: Azure Speech Studio

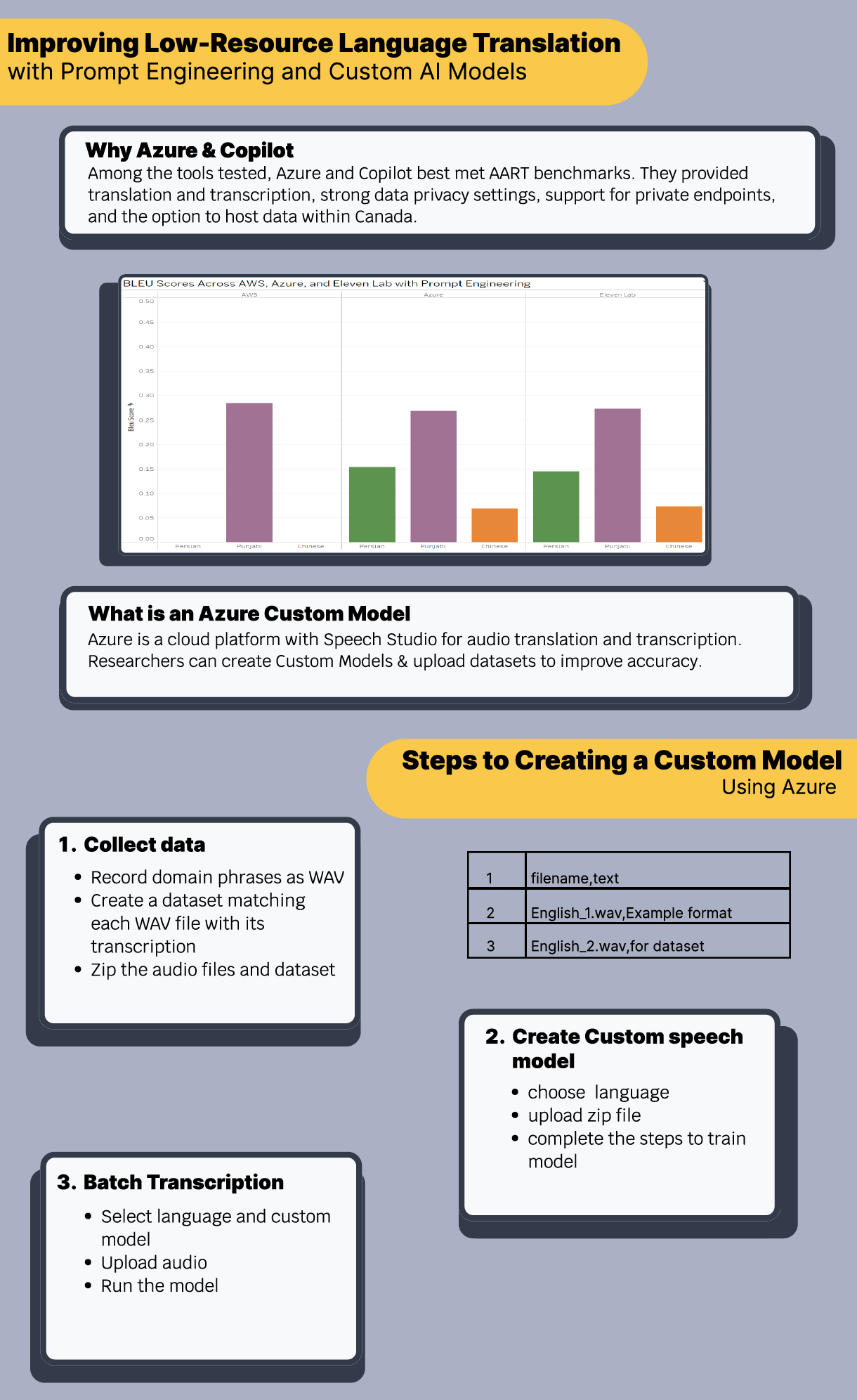

The team identified Azure, a cloud computing platform that offers services such as Speech Studio, which provides audio translation and transcription. Azure states that it does not collect client data to train its models (4). UBC research ethics has determined it is critical for research teams to use a private endpoint when working with AI, which ensures that data remains secure and inaccessible to outsiders. Azure allows users to create a private endpoint when using Speech Studio (4).

Challenge #2: Algorithmic biases

Low-resource languages often have limited datasets available to train AI models on. The lack of data can lead to algorithmic bias, where translations may be inaccurate, miss cultural or linguistic nuances, or even produce hallucinations (outputs where the model invents content) (3,5). Because many vendors do not make their datasets open source, it is often impossible to trace how a model arrived at its translation decisions (2). Research has shown, however, that outputs improve significantly when models are trained on linguistic and context-specific datasets (6). Scholars argue that AI translation models should be built and trained by members of the communities whose languages they represent to improve accuracy and reduce harmful outputs.

Solution: Upload custom datasets for training

One way to address algorithmic bias is to use models that allow researchers to upload custom datasets for training (5). Azure offers this functionality. While users cannot yet train its speech-to-text translation, they can train custom transcription models. This creates an opportunity for AART and other research teams to develop models that better capture cultural and linguistic nuances, as well as health-related topics. Some researchers also argue that participants themselves should have the option to contribute to model development, increasing both transparency and the quality of outputs.

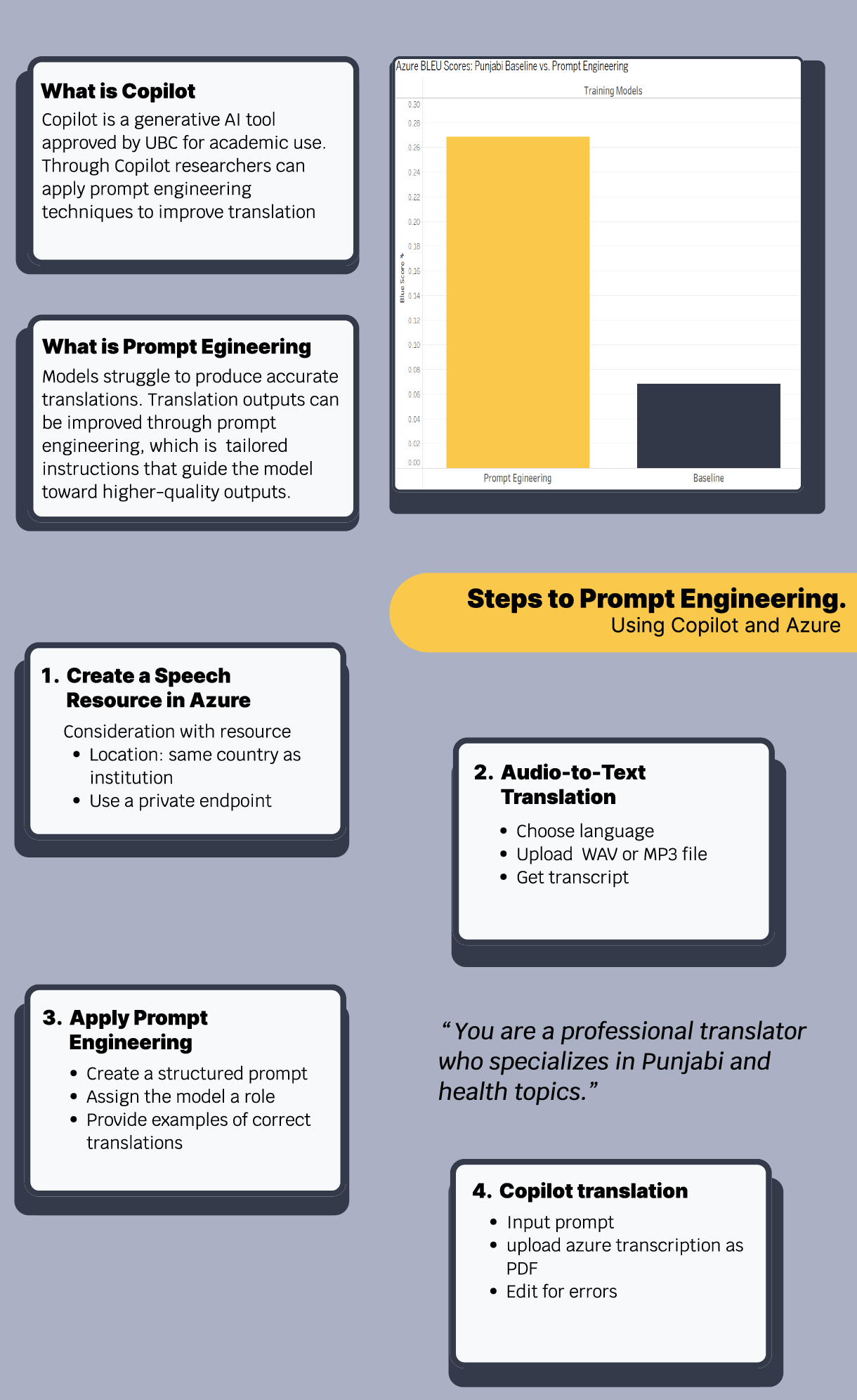

Solution: Prompt engineering and Copilot

Creating a dataset and training a model can be time-intensive, and speech-to-text translation often yields low-quality outputs. However, there are other options to improve AI translation. One promising method is prompt engineering, which involves designing tailored instructions to guide the model toward high-quality results (6). There are many ways to create an effective prompt, including assigning the model a role, providing important context, and using a structured pattern. Researchers have demonstrated that providing examples for the AI to reference is one of the most effective ways to significantly improve translation output (6).
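To make these prompt elements concrete, here is a minimal sketch in Python that assembles a prompt from a role, context, a structured set of instructions, and reference examples. It is an illustration only, not the prompt AART used: the function name `build_translation_prompt`, the instruction wording, and the placeholder examples are all assumptions.

```python
def build_translation_prompt(source_text, source_language, examples, context):
    """Assemble a few-shot translation prompt as plain text.

    Hypothetical helper for illustration; the wording is an assumption,
    not the prompt used in the testing described in this article.
    """
    lines = [
        # Role: tell the model who it is acting as.
        f"You are a professional {source_language}-to-English translator "
        "working with health research interview transcripts.",
        "",
        # Context: background the model needs to choose the right wording.
        f"Context: {context}",
        "",
        # Structured pattern: numbered instructions the model can follow.
        "Instructions:",
        "1. Translate the transcript into natural English.",
        "2. Preserve the speaker's meaning and tone; do not add or omit content.",
        "3. Mark unclear passages with [unclear] instead of guessing.",
        "",
        # Examples: verified source/translation pairs for the model to imitate.
        "Examples:",
    ]
    for source, target in examples:
        lines.append(f"{source_language}: {source}")
        lines.append(f"English: {target}")
        lines.append("")
    lines.append("Transcript to translate:")
    lines.append(f"{source_language}: {source_text}")
    lines.append("English:")
    return "\n".join(lines)


# Placeholder inputs; real use would supply human-verified sentence pairs.
prompt = build_translation_prompt(
    source_text="<transcript excerpt>",
    source_language="Punjabi",
    examples=[("<sentence from a past interview>", "<its verified English translation>")],
    context="Interviews with older adults about a physical activity program.",
)
print(prompt)
```

The resulting text can be pasted into a chat tool or sent through whichever interface a team is approved to use. Keeping the examples as a separate argument makes it easy to swap in pairs reviewed by speakers of the language.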

While many generative AI tools exist, the University of British Columbia has approved Copilot for research use. Copilot is a strong option for research teams because it can enhance translation outputs and does not use user inputs or personal information to train itself without explicit permission (8). In testing, using Copilot with prompt engineering dramatically improved translation quality: the test interview achieved a BLEU score of 0.27, compared to Azure’s audio-to-text translation, which scored only 0.07. BLEU is a standard metric for evaluating the similarity between a machine translation and a human translation; scores range from 0 to 1, with higher scores indicating closer agreement.
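For readers curious how a BLEU score is calculated, the sketch below implements a simplified version in Python: it counts the word sequences of length one to four that the machine translation shares with the human translation, then applies a penalty when the machine translation is shorter. It makes several assumptions (whitespace tokenization, lowercasing, one human reference per sentence, no smoothing) and is not the tool used to produce the scores above, so its numbers would not be directly comparable.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidates, references, max_n=4):
    """Simplified corpus-level BLEU, returned on a 0-to-1 scale.

    candidates: machine translations, one string per sentence.
    references: human translations, aligned with candidates.
    """
    matched = [0] * max_n
    total = [0] * max_n
    cand_len = ref_len = 0
    for cand, ref in zip(candidates, references):
        c, r = cand.lower().split(), ref.lower().split()
        cand_len += len(c)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            c_counts, r_counts = ngrams(c, n), ngrams(r, n)
            # Clipped matches: an n-gram is credited at most as many
            # times as it appears in the human reference.
            matched[n - 1] += sum((c_counts & r_counts).values())
            total[n - 1] += sum(c_counts.values())
    # Without smoothing, a missing n-gram order drives the score to zero.
    if cand_len == 0 or min(matched) == 0:
        return 0.0
    log_precision = sum(math.log(m / t) for m, t in zip(matched, total)) / max_n
    # Brevity penalty: discourage translations shorter than the reference.
    brevity_penalty = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return brevity_penalty * math.exp(log_precision)


machine = ["the program helped me stay active each day"]
human = ["the program helped me stay active every day"]
print(round(bleu(machine, human), 2))
```

Because BLEU only rewards exact word overlap, a translation that conveys the right meaning in different words can still score low, which is one reason human review remains important.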

Conclusion

Using AI is not easy. It is messy and complicated, much like qualitative research itself. AI is not a static or neutral entity but a tool shaped by the people who develop and train it. It inevitably reflects the biases of its creators and the data on which it was trained. AART’s long history with qualitative research has prepared the team to navigate the nuances of AI thoughtfully. Many of the same ethical considerations apply, such as obtaining consent for the use of data, ensuring transparency in data collection, and carefully addressing cultural differences to minimize bias in the work. Research teams can use data ethically to build models in collaboration with linguistic communities, ensuring that cultural and linguistic nuances are preserved and respected. When designed with care, these models can help bridge language barriers and ensure that all participants’ voices are represented in AART’s research.

References

1. Abdul-Mageed M. How Deep Learning Actually Learns About Language [Internet]. Medium. 2021 [cited 2025 July 16]. Available from: https://mumageed.medium.com/how-deep-learning-actually-learns-about-language-b3f95d752acd

2. Andrade Preciado JS, Sánchez Ramírez HJ, Priego Sánchez Ángeles B, Gutiérrez Pérez EE. Ethical challenges in AI-assisted translation. Ethaica. 2025. doi:10.56294/ai2025151.

3. Arora A, Barrett M, Lee E, Oborn E, Prince K. Risk and the future of AI: Algorithmic bias, data colonialism, and marginalization. Information and Organization. 2023 Sept 1;33(3):100478.

4. Azure Document Intelligence: Is customer data used to train models? - Microsoft Q&A [Internet]. [cited 2025 Sept 28]. Available from: https://learn.microsoft.com/en-us/answers/questions/1864387/azure-document-intelligence-is-customer-data-used

5. Guerreiro NM, Alves DM, Waldendorf J, Haddow B, Birch A, Colombo P, et al. Hallucinations in Large Multilingual Translation Models. 2023;1500–17.

6. Khoboko PW, Marivate V, Sefara J. Optimizing translation for low-resource languages: Efficient fine-tuning with custom prompt engineering in large language models. Machine Learning with Applications. 2025 June 1;20:100649.

7. Lion KC, Lin YH, Kim T. Artificial Intelligence for Language Translation: The Equity Is in the Details. JAMA. 2024 Nov 5;332(17):1427–8.

8. tonyafehr. FAQ for Copilot data security and privacy - Dynamics 365 [Internet]. [cited 2025 Sept 28]. Available from: https://learn.microsoft.com/en-us/dynamics365/faqs-copilot-data-security-privacy